Tackling the Staking Problem

In 2018 I feared Proof of Stake wouldn't be as decentralised as Proof of Work. In 2022 I'm doing something about it with Obol Labs.

In 2018 I wrote my first article on the centralisation risk of Ethereum's proof of stake proposal. At the time I did not anticipate the post becoming a trilogy.

The premise of "The Staking Problem" was this: with a proposed minimum stake of 1,500 ether and 100% slashing penalties, I felt that only specialised Staking-as-a-Service companies would be able to run Ethereum validators, and that sooner or later this shrinking, centralising group would be pressured into compromising Ethereum's neutrality.

Almost two years later, in the summer of 2020, the architecture for Eth2 was far better defined, and cryptographic breakthroughs had brought the minimum stake down to 32 ether. I had recently gone self-employed during the pandemic and, to fill my oh-so-empty blog, I wrote a follow-up called "The Staking Problem (revisited)".

Around then I landed my first client: I had the pleasure of leading Blockdaemon's Ethereum 2 effort for over a year, during which we built one of the largest Ethereum staking deployments in the world to date. Throughout that work, however, I was keen to ensure we didn't inadvertently bring about the compromisable state I had warned about previously.

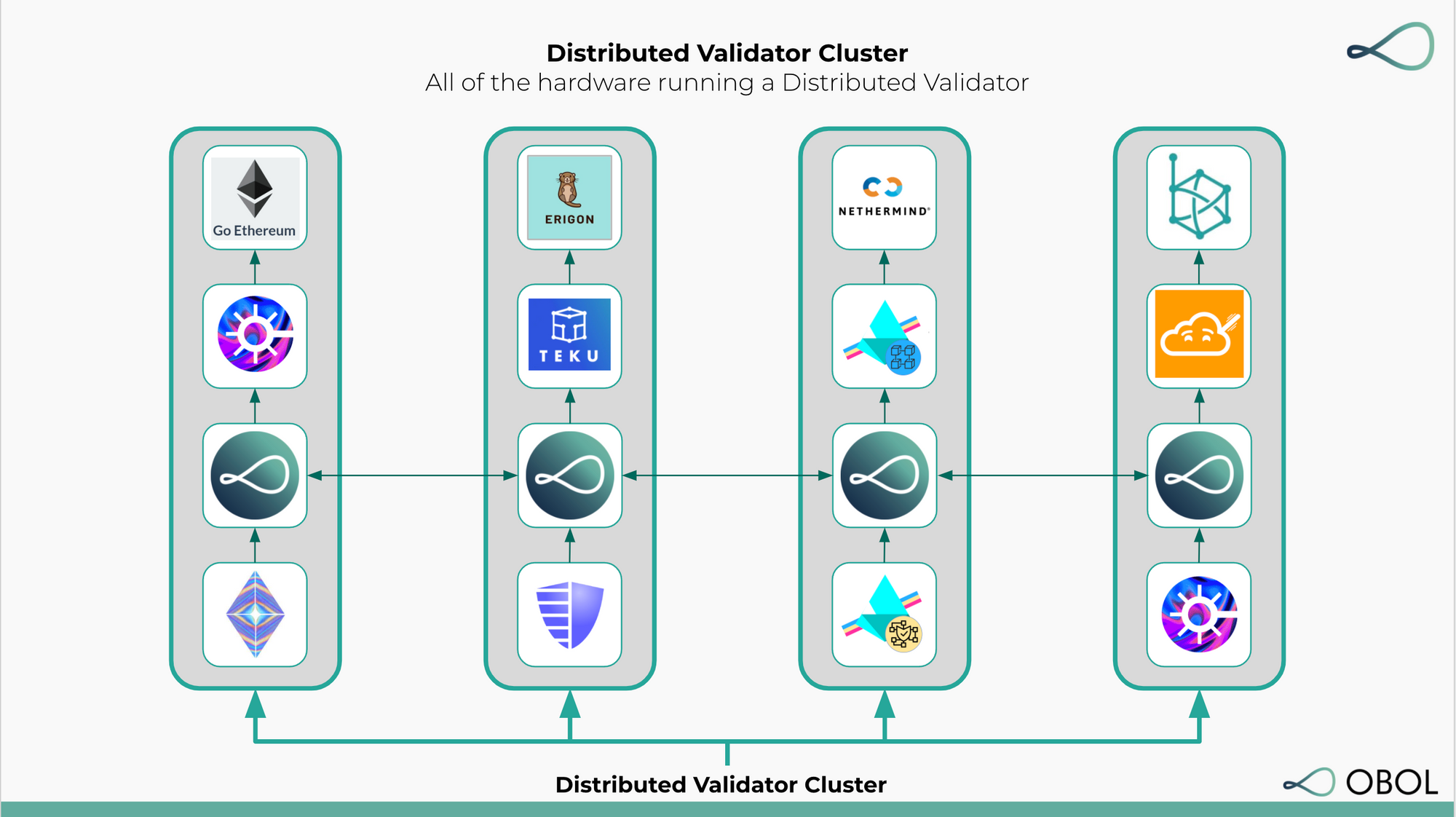

After the beacon chain launched, I began building software to further decentralise staking, and swiftly realised I did not know enough about the world of business to pull this off alone. I was re-introduced to Collin Myers, who was working on the economics of protocolising Ethereum's decentralisation, and together we founded Obol Labs. Obol Labs is a research and software development team focused on PoS infrastructure for public blockchain networks. The core team is currently building the Obol Network, a protocol to foster trust-minimised staking through multi-operator validation. The network aims to increase Ethereum's resilience and decentralisation by using Distributed Validator Technology to remove the technical single point of failure that each validator client represents.

We are going to tackle the staking problem.

Fault Tolerant Staking

Right now, it is too hard to run a backup system for a validator safely. Odds are, you'll blow up and get your validators slashed. Don't try it. What typically happens is that, sooner or later, your primary validator and your backup both end up online at the same time and double-sign something. Your one validator key was running in both places, and it signed two conflicting messages for the same duty and sent them to different parts of the network. The network interprets this as an attack on its ability to come to consensus, and punishes you severely for it.

This model of having two independent validator systems that share the same private key and are meant to operate at different times is called Active/Passive redundancy.
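To make the failure mode concrete, here is a toy Python sketch of the kind of check a validator client's local slashing protection performs for block proposals. It is deliberately simplified: `SlashingProtection`, `may_sign` and `is_double_proposal` are hypothetical names, and the only rule modelled is the proposer case (two different blocks signed for the same slot). What it illustrates is that a primary and a backup, each with its own local record, will both happily sign, because neither knows what the other has already signed.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SlashingProtection:
    """Hypothetical, simplified local slashing-protection record.

    Remembers which block root was signed for each slot and refuses to
    sign a *different* block root for a slot it has already signed.
    """

    signed: dict[int, str] = field(default_factory=dict)

    def may_sign(self, slot: int, block_root: str) -> bool:
        previous = self.signed.get(slot)
        if previous is not None and previous != block_root:
            return False  # signing would create a double proposal
        self.signed[slot] = block_root
        return True


def is_double_proposal(a: tuple[int, str], b: tuple[int, str]) -> bool:
    """Two (slot, block_root) messages signed by the same key conflict
    when they are for the same slot but commit to different blocks."""
    return a[0] == b[0] and a[1] != b[1]


# Active/Passive: primary and backup share one key but keep SEPARATE records.
primary = SlashingProtection()
backup = SlashingProtection()

slot = 100
ok_primary = primary.may_sign(slot, "0xaaa")  # primary sees no conflict
ok_backup = backup.may_sign(slot, "0xbbb")    # backup never saw 0xaaa

print(ok_primary, ok_backup)  # True True
print(is_double_proposal((slot, "0xaaa"), (slot, "0xbbb")))  # True: slashable
```

Had both instances shared one record, the second request would have been refused (`primary.may_sign(slot, "0xbbb")` returns `False`), which is why Active/Passive setups depend entirely on keeping that record perfectly in sync.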

The best advice in the staking space so far has been "just don't run a backup; outages are no big deal". That is not a sufficient answer for a market that will some day be measured in trillions of dollars. Not having a fault-tolerant staking system creates risk for staking operators of every size. If you're a solo node operator, you can't be expected to be on call 24/7/365. Large operators, on the other hand, have to decide how many on-call engineers they need if their validator failover process is manual rather than automated. If one machine dies during a shift, no big deal, but if 100 nodes die, can one engineer triage and fail over all of them safely and quickly without any of them coming back to life and causing a slashing?

Something is not right here.

We can do better than this. We believe that by removing points of technical failure from validator nodes, we can open a new design space that will enable the next evolution in network validation and staking models.

Collaborative Staking

At Obol, we are researching and building an infrastructure primitive called Distributed Validator Technology. DVT enables a new kind of validator, one that runs across multiple machines and clients simultaneously but behaves like a single validator to the network. Your validator can stay online even if a subset of those machines fail; this is called Active/Active fault tolerance. Think of it like the engines on a plane: they all work together to fly the plane, but if one fails, the plane isn't doomed.
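The "keeps working when a subset fails" property comes from threshold cryptography. As I understand it, DVT designs typically use threshold BLS signatures, where each operator signs with its own key share so the full validator key never has to be reassembled. The toy below swaps in the simpler Shamir secret sharing over a prime field purely to show the k-of-n idea: in a hypothetical 3-of-4 cluster, any three shares are enough, so one machine can be offline. The function names and parameters are illustrative assumptions, not how a real distributed validator should handle keys.

```python
from __future__ import annotations

import secrets

# Prime modulus for the field; 2**127 - 1 is a Mersenne prime.
# Secrets must be smaller than this value.
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, shares: int) -> list[tuple[int, int]]:
    """Shamir split: any `threshold` of the `shares` points recover `secret`."""
    if not 0 < threshold <= shares:
        raise ValueError("need 0 < threshold <= shares")
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in [0, PRIME)")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def f(x: int) -> int:
        acc = 0
        for c in reversed(coeffs):  # Horner's method, highest degree first
            acc = (acc * x + c) % PRIME
        return acc

    # One point per operator, evaluated at x = 1..shares (never x = 0).
    return [(x, f(x)) for x in range(1, shares + 1)]


def recover_secret(points: list[tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical 3-of-4 cluster: operator 2's machine is down overnight.
key = 123456789
key_shares = split_secret(key, threshold=3, shares=4)
online = [key_shares[0], key_shares[2], key_shares[3]]
print(recover_secret(online) == key)  # True
```

The threshold is the design knob: a higher one demands more operators online to sign, a lower one tolerates more outages but trusts fewer operators.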

Obol's mission is to enable and empower people to share the responsibility of running the network. If you are part of a distributed validator cluster, and your machine dies overnight, the other operators in your cluster will have your back. You'll cover for them some other time when they go on holidays for a week and their node falls out of sync. If we can share the responsibility of running nodes, we can open a new frontier of decentralisation.

Solo validators can have a backup. Staking firms can share risk and reward. DeFi protocols can diversify their staked ether exposure. Major institutions can hedge cloud provider risk. Everyone benefits from building fault-tolerant, distributed validator tech.

The Staking Problem

So how do high-availability validators help with stake centralisation, Oisín?

Here's my take:

Right now, you take a massive bet on the person or team running your validator for you. If they do everything right, they earn you a couple of percent interest a year; if they do everything wrong, they lose it all.

The decentralised staking industry is extremely nascent, and we haven't yet figured out how best to build trust-minimised staking for the community. Projects like Lido pool risk across everyone, while projects like Rocket Pool isolate risk into individual pools. One gates entry with humans and votes; the other gates entry with tokens and bonding.

My belief is that if we can remove the single point of failure in validator operation, we can place more trust in smaller node operators. I believe a DAO wouldn't trust a single member to stake its treasury's ether, but it might entrust a group of members to run validators together with shared accountability.

You and your buddies might not have 32 ether alone, but together you could go splits on a validator as a group, and all share the reward.
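Going splits needs a rule for dividing the rewards. A minimal sketch, assuming a simple pro-rata split by deposit and amounts tracked in integer gwei (1 ether = 10^9 gwei) to avoid floating-point error. The `split_rewards` helper, the three contributors and the 1 ether reward are all hypothetical; a real setup would also have to handle fees, penalties and how the split is actually enforced.

```python
from __future__ import annotations

GWEI_PER_ETH = 10**9
VALIDATOR_DEPOSIT_GWEI = 32 * GWEI_PER_ETH


def split_rewards(contributions_gwei: dict[str, int], reward_gwei: int) -> dict[str, int]:
    """Hypothetical pro-rata split of a validator's reward by deposit share."""
    total = sum(contributions_gwei.values())
    if total != VALIDATOR_DEPOSIT_GWEI:
        raise ValueError("contributions must add up to exactly 32 ether")
    # Integer maths in gwei: each member gets reward * their_deposit / 32 ether.
    payouts = {
        name: reward_gwei * amount // total
        for name, amount in contributions_gwei.items()
    }
    # Rounding down can leave a few gwei unassigned; give it to the largest depositor.
    dust = reward_gwei - sum(payouts.values())
    largest = max(contributions_gwei, key=contributions_gwei.get)
    payouts[largest] += dust
    return payouts


# Three friends pool 16 + 10 + 6 = 32 ether and earn a made-up 1 ether reward.
group = {
    "alice": 16 * GWEI_PER_ETH,
    "bob": 10 * GWEI_PER_ETH,
    "carol": 6 * GWEI_PER_ETH,
}
print(split_rewards(group, 1 * GWEI_PER_ETH))
# {'alice': 500000000, 'bob': 312500000, 'carol': 187500000}
```

Sending rounding dust to the largest depositor is just one simple convention; any deterministic rule works as long as the whole group agrees on it up front.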

A custodian might not trust a single operator to stake its clients' ether, but it would trust a group of operators working together.

If we can share risk, we can share stake. If we want to solve the staking problem, we need to make Ethereum staking safe and profitable for groups of humans together.

This is coordination technology after all.