Performance Testing Distributed Validators

Earlier this year, we worked with MigaLabs, the blockchain ecosystem observatory located in Barcelona, to perform an independent test to validate the performance of Obol DVs. We’re happy to share the results of these performance tests.

In our mission to help make Ethereum consensus more resilient and decentralised with distributed validators (DVs), it’s critical that we do not compromise on the performance and effectiveness of validators. Earlier this year, we worked with MigaLabs, the blockchain ecosystem observatory located in Barcelona, to perform an independent test to validate the performance of Obol DVs under different configurations and conditions. After taking a few weeks to fully analyse the results together with MigaLabs, we’re happy to share the results of these performance tests.

📝 You can find the full report here:

Background

The main goal of the performance tests was to compare the performance of distributed validators with traditional (or non-distributed) validators. Since Charon operates on a P2P network, it is critical to ensure that the communication between DV nodes does not affect the validator’s performance by delaying the broadcast of signed duties. MigaLabs tested Charon in its standard configurations of 4, 7, and 10 nodes. Additionally, we wanted MigaLabs to stress test Charon for extreme cases with different geolocations, beacon client configurations, and hosting services to see how performance was affected by the distribution and setup of nodes within a DV cluster. We also wanted to see how DVs performed as we scaled up the number of validators within a cluster, with MigaLabs ramping a single DV cluster to as high as 3000 validators.

To ensure that enough data was collected to provide a robust statistical analysis, MigaLabs performed a long, multi-phased experiment that lasted for a total of 10,000 epochs (or 1.5 months) of continuous execution. This performance test was run on the Ethereum Goerli testnet.
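As a quick sanity check on that duration, the conversion from epochs to wall-clock time can be sketched as follows. It assumes the standard consensus-layer timing of 12-second slots and 32 slots per epoch; the function name `epochs_to_days` is purely illustrative.

```python
# Consensus-layer timing constants (assumed: standard values used on
# Ethereum mainnet and on the Goerli testnet).
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32
SECONDS_PER_DAY = 86_400


def epochs_to_days(epochs: int) -> float:
    """Convert a number of epochs to wall-clock days."""
    seconds = epochs * SLOTS_PER_EPOCH * SECONDS_PER_SLOT
    return seconds / SECONDS_PER_DAY


days = epochs_to_days(10_000)
print(f"{days:.1f} days")  # 44.4 days
```

At 6.4 minutes per epoch, 10,000 epochs come to roughly 44 days, which matches the figure of about 1.5 months.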

To analyse the performance, the following indicators were measured and compared:

- Hardware resource utilisation of nodes

- Latency between nodes within the same cluster

- Attestation submissions and block proposals

- Total rewards obtained

Result Highlights

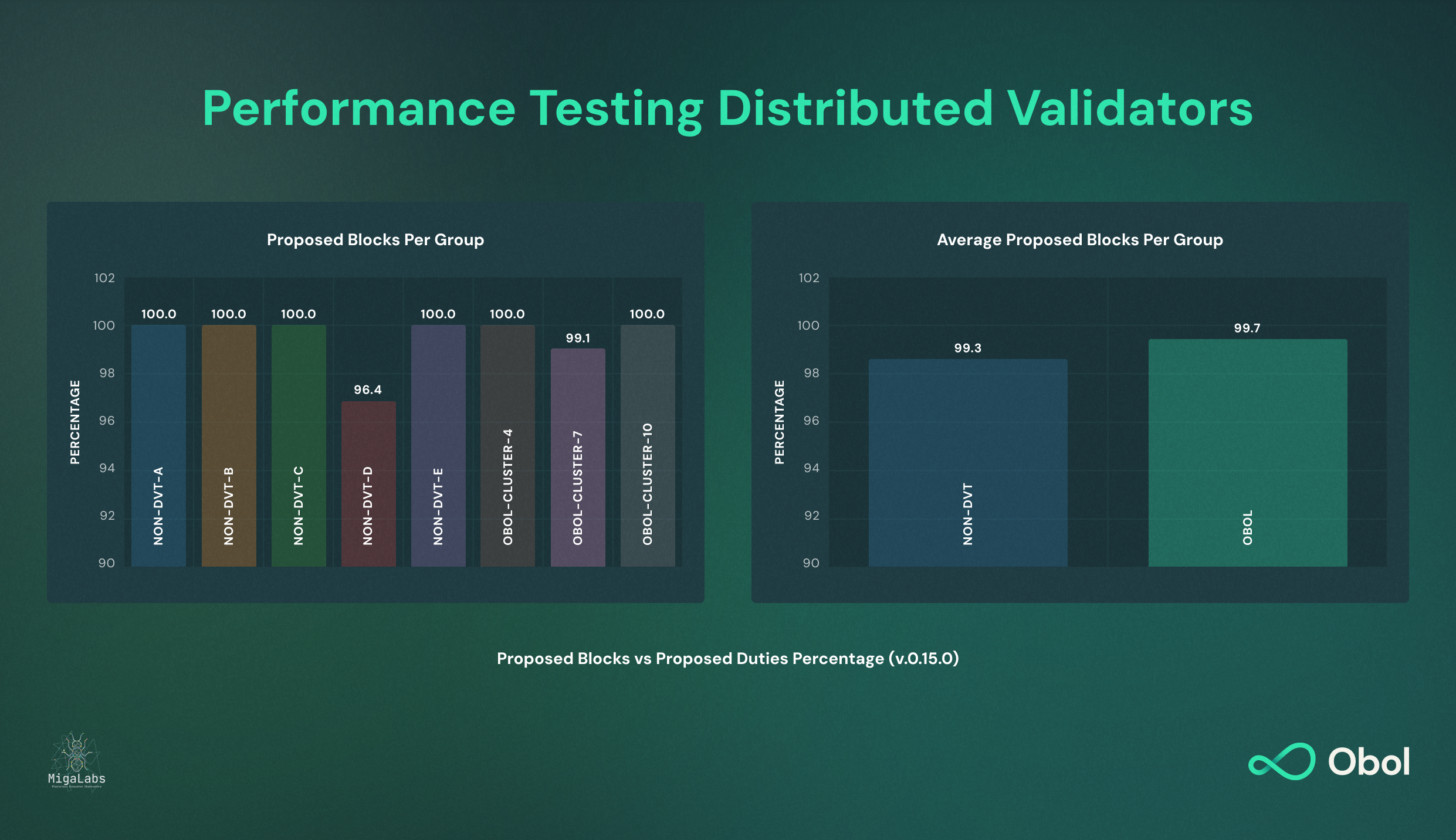

Overall, MigaLabs found that for all of the measured indicators the performance of DVs was comparable with that of traditional, non-distributed validators. For critical indicators like attestation duties, block proposals, and achieved rewards, the difference between Obol DVs and traditional validators was under 1%. For block proposals, DVs were actually observed to perform slightly better than traditional validators (after the release of v0.15.0), though the difference could be attributed to sampling variance.

Obol DVs also demonstrated other positive attributes. The tests showed that even with the additional software for Charon, Obol DVs can still operate well on hardware with limited resources. The latency between nodes was also acceptable, even for clusters with nodes distributed across the world (and it improved by more than 40% after the release of v0.15.0). The inclusion delay of Obol DV clusters showed that 70% of attestations were included in the next slot (with 90%+ in the first five slots), which is much better than the network average on the Goerli (Prater) testnet.
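To make the inclusion-delay figures concrete, here is a minimal sketch of how such shares could be computed. It is not the method used in the report: it assumes each attestation is recorded as a pair of the slot it attests to and the slot of the block that included it, and it reads "first five slots" as a delay of at most 5. The function name and the sample data are hypothetical.

```python
def inclusion_delay_shares(attestations, max_delay=5):
    """Return (share included in the next slot, share included within max_delay slots).

    attestations: iterable of (attested_slot, included_slot) pairs.
    Inclusion delay is included_slot - attested_slot; the minimum is 1.
    """
    delays = [included - attested for attested, included in attestations]
    if not delays:
        raise ValueError("no attestations given")
    if any(d < 1 for d in delays):
        raise ValueError("an attestation cannot be included before the next slot")

    total = len(delays)
    next_slot = sum(1 for d in delays if d == 1) / total
    within = sum(1 for d in delays if d <= max_delay) / total
    return next_slot, within


# Hypothetical sample, not data from the report:
# 7 attestations with delay 1, 2 with delay 3, 1 with delay 8.
sample = [(100, 101)] * 7 + [(100, 103)] * 2 + [(100, 108)]
next_slot, within_five = inclusion_delay_shares(sample)
print(next_slot, within_five)  # 0.7 0.9
```

A delay of 1 is the best case, since an attestation can be included no earlier than the slot after the one it attests to.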

Perhaps the most surprising finding was that during the scaling test, the performance of Obol DVs actually improved once the number of validators was increased to several thousand (further analysis is needed to fully confirm this finding).

Takeaways & Next Steps

The findings of these independent performance tests are very promising for the progress we are making towards our V1 launch. In our mission to improve the resiliency, security, and decentralisation of the Ethereum network, we need to ensure that DVs can match the performance of existing validators and cater to the needs of all types of validators, however small or large: from a single validator on a small server in the living room to thousands of validators in a data centre, and from multiple nodes in a single location to nodes spread across the world. The performance of Obol DVs during these tests gave us more confidence that we are on track to achieve our goals.

With that said, there is still much more to be done ahead of our V1 launch. We will continue our preparation by involving a larger part of our ecosystem in testing Obol DVs in various real-world environments to further validate their performance, security, and accessibility. More exciting updates to come! Stay tuned 👀